Distributed Architecture Overview

Atoti leverages multicore CPU architectures extremely efficiently. The performance obtained from server CPUs with a large number of cores and large amounts of memory available to the JVM, together with the capability to swap part of the memory on disk, has allowed Atoti to successfully deliver outstanding performance to almost all business cases of its client base.

However, some use cases may benefit from having an architecture that supports multiple servers, spread across a local area network or a wide area network to deliver accrued performance and improve resilience.

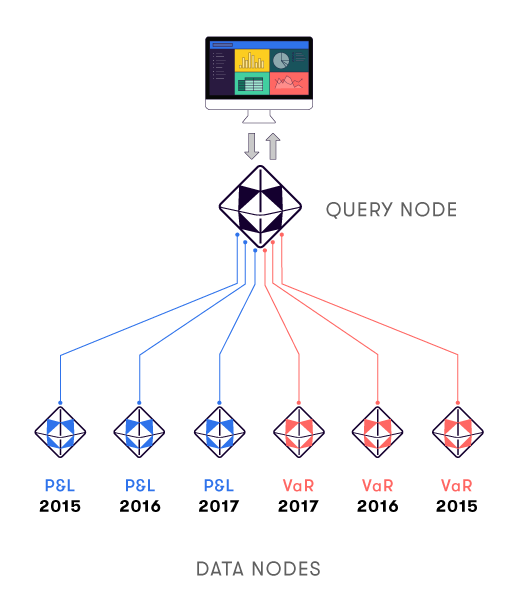

Atoti can run as a distributed cluster on many machines. On each node of the cluster, two kinds of cubes can be run:

- Data nodes, which contain data cubes

- Query nodes, which contain query cubes

Query cubes

Query cubes offer a view of the cluster to the UI, and are the only ones that can receive queries across the entire cluster.

A query cube does not store any data in its datastore: when queried, it forwards the query to all data cubes in the cluster, performs the final steps of aggregation/post-processing, and returns the result to the user.

To be able to perform queries, a query cube does store one thing: the global cluster hierarchies, that is, all the members of all the hierarchies of all the data cubes. This means that the query cube's topology represents a merger of the topologies of all the corresponding data cubes.

For details about query execution and cluster creation, see the Communication flow topic.

Data cubes

Data cubes are the ones storing the data: their datastore is full of facts.

They also define their own hierarchies over those facts, and their own measures. So the data cubes

in a cluster can be very different from each other, as long as the query cubes are aware of the

differences.

Applications

Applications in Atoti distribution, are the concept used to group data cubes having the same topology (i.e. same hierarchies, levels and measures) together. They can be seen as a set of identical cubes from the topology point of view. Yet, these cubes can have different data. For instance, one can have a cube with only data of the EU zone and another one for the US zone.

Cubes within an application form what we call horizontal distribution, as opposed to polymorphic distribution, which is the grouping of applications with different topologies at the query cube level.

For instance, one can have an application for Sensitivity, one for P&L and one for VaR: each application has specific cubes that compute the measures very quickly and only store the data relevant to their measure. But the query cube provides a consolidated Risk/PnL view, the trade identifier being a common identifier allowing to "glue" the measures together.

Two different applications cannot share the same measures.

Clusters

A cluster is a group of data cubes and query cubes that can communicate together.

Scalability

All data cubes that belong to the same application can share the same measure definitions, and their underlying data will be aggregated together. This corresponds to the idea of horizontal scaling.

Data cubes that belong to different applications MUST NOT share any measure definitions (except for the native contributors.COUNT and update.TIMESTAMP). By splitting strictly disjoint measures, and their associated data across multiple servers, it is possible to achieve a polymorphic distribution.

Polymorphic distributions are useful when measures do not apply across all dimensions. In this case, you can split the dimensions into smaller cubes, each with its own consistency, while keeping a comprehensive view of all available data within the query cubes, which are aware of the different applications.

It is therefore possible to have a combination of polymorphic and horizontal distributions within the same cluster using the application abstraction.

This is achieved in the Query Cube builders by specifying several applications, each with its own

distributing levels (see below).

When receiving the contributions to the Query Cube's hierarchies from a specific data node, we try

to merge dimensions, hierarchies and levels when they have the same unique name (the unique name is

the path to a specific level: for instance, the level Year's unique name could look like

[TimeDimension].[TimeHierarchy].[Year]). This should feel natural to the end user.

Two hierarchies with the same name, but in different dimensions, will never be merged.

If Application1 has more levels in a specific hierarchy than Application2, the levels that can be merged will be, and the remaining levels will only appear for Application1.

For instance, three data nodes from a P&L application, distributed along a year field, and three data nodes from a VaR application, distributed along a year field, cohabit in the same cluster and can be queried from one query node.

For tips on configuration, see Recommendations.

Distributing levels

Distributing levels partition the data within an application. Here is a definition example:

return StartBuilding.cube(CUBE_NAME)

.withCalculations(

c -> {

Copper.count().withinFolder(NATIVE_MEASURES).withFormatter(INT_FORMATTER).publish(c);

Copper.timestamp()

.withinFolder(NATIVE_MEASURES)

.withFormatter(TIMESTAMP_FORMATTER)

.publish(c);

})

.asQueryCube()

.withClusterDefinition()

.withClusterId(CLUSTER_ID)

.withMessengerDefinition()

.withProtocolPath("jgroups-protocols/protocol-tcp.xml")

.end()

.withApplication(APPLICATION_ID)

.withDistributingLevels(new LevelIdentifier("Time", "HistoricalDates", "AsOfDate"))

.end()

.withEpochDimension()

JGroups

In the previous example, we defined the configuration of a messenger (see withMessengerDefinition).

Each cube, whether it is a data or a query cube, comes with its own messenger.

A messenger allows for the discovery of the different members of the cluster, so that the cubes can interact with each other. It also keeps an up-to-date list of members within a cluster. When a machine is turned off, it is removed from the cluster.

For messengers to work, Atoti relies on JGroups for membership detection and join/left/shutdown cluster nodes notifications.

We provide two JGroups configuration files in the sandbox. Both are only intended for development purposes with all cubes running on the same server/computer, and are not fit for production.

The production configurations highly depend on the cubes' locations and should be modified accordingly.