Analysis Hierarchies

The concept of this page is an advanced concept of ActivePivot and should only be tackled once the reader is familiar with basic OLAP concepts and the associated terminology.

The reader of this document is notably expected to be familiar with the notions of Hierarchies and Locations.

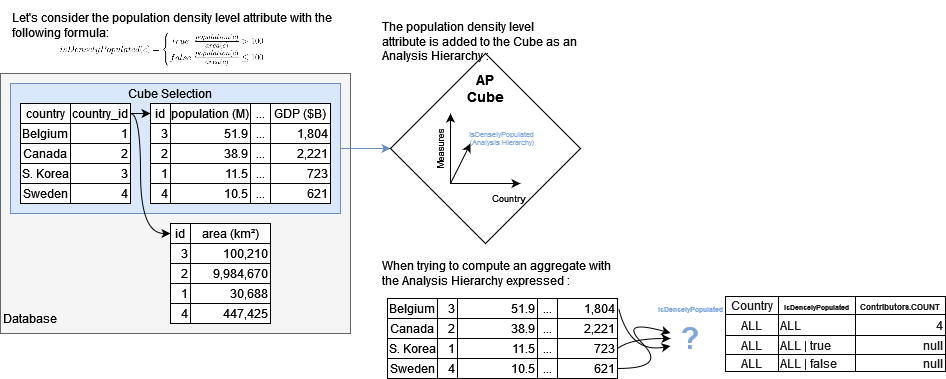

Analysis Hierarchies are a concept that allows for an ActivePivot cube to define analytic attributes/levels with a larger degree of freedom : This allows for the definition of attributes (later used in the hierarchical structure of the cube) in a functional way, and does not require these attributes to be based on one of the database fields.

Concept definition and specificities

We call Analysis Hierarchy any hierarchy of an ActivePivot cube containing at least one level whose members are not created using the cube's schema fact selection fields.

This naming convention is to be opposed to "standard Hierarchies" that are used for binding the database's records to the cube's aggregates. The members of a level in a standard hierarchy are by definition the values of the field that was associated to it.

A Hierarchy is often be assumed to be a standard Hierarchy unless stated otherwise. However, both Analysis Hierarchies and standard Hierarchies are hierarchies of the cube in a structural fashion.

The values of the members of an Analysis Hierarchy are programmatically defined, and can be either static of dynamic. The lack of constraints on the definition allows for a functional definition for its levels, which for example can be based on business requirements rather than the existing data within the database.

Analysis Hierarchies are therefore a powerful tool that allows for the integration of programmatically-defined members to the hierarchical structure of the cube, thus reinforcing the cube's analytic capabilities through the addition of functionally-defined attributes as the hierarchies' levels.

Usage Examples

Here are two use-cases for Analysis Hierarchies :

Introduction of a parameter for a subset of measures

If one's measure definition is parameterized by a static parameter, and said measure is to be visualized for multiple parameter values at the same time, then the attribute corresponding the parameter can be added as a level of an analysis hierarchy, with the parameter values as the level members.

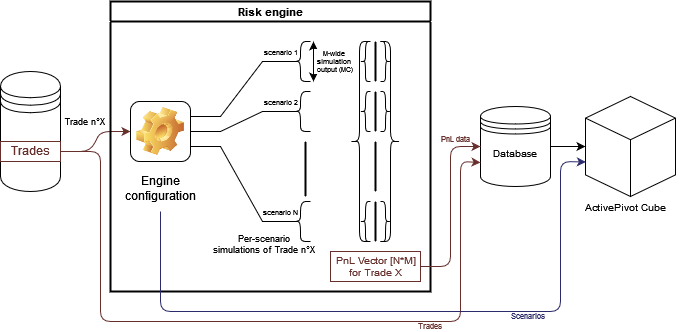

Ex : PnL exploration for VaR breach scenario

When estimating market risk through the computation of the VaR metrics, one of the methods is to use scenario-backed Monte-Carlo simulations. In that case, risk simulations will be stored in the database inside scenario-indexed vectors for improving the computation efficiency of the VaR metrics, so the scenario attribute is not explicitly available through the data, and thus cannot be used as the level of a standard Hierarchy. However, there are various business cases where some metrics are to be analyzed on a per-scenario basis, for example when analyzing why such scenario leads to a VaR increase, or when back testing the VaR model, since this allows to inspect which scenario was the closest to an actual VaR breach.

Therefore, adding an Analysis Hierarchy with the "Scenario" attribute inside a VaR cube will allow to retain the computational optimization of the VaR metrics through vectorization while keeping the database model intact, while allowing for the analysis of some specific metrics per scenario.

Introduction of an additional analysis level

A recurrent need when analyzing complex data is to create, use, and manipulate attributes corresponding to several groupings of the facts following a functional logic that is not available in the database records.

Introducing an Analysis Hierarchy along with a Procedure defining the functional relationships between the facts and the groupings (called Aggregation Procedure) allows for the definition of a fully customizable hierarchy whose members correspond to the desired functional logic, without affecting the database at all.

Ex : Sliding temporal buckets in Liquidity

In liquidity, trade consolidation dates are often grouped in buckets, on which some computations may depend.

The addition of the matching bucket as a pre-computed field of the base through the ETL is not always a satisfying solution as the buckets will be statically stored in that case, when the user can expect the buckets to be computed relative to the time of the analysis, rather than at the time data was inserted. In some cases, individual trades may move to other buckets between those two times, so the analysis would be incorrect.

Adding the "Time Bucket" attribute to the cube through an Analysis Hierarchy allows for a computation of the bucket matching trades at the time of the query, making the query output aligned with the expectations of the user.

Note : Defining a field through the ETL or the sources' calculated column creation methods and using that field as part of a standard hierarchy is the preferred method for defining bucketing attributes.

Analysis Hierarchies should be considered for bucketing when the functional definition of the buckets requires information that cannot be inferred when the data is inserted in the database (Here we need the query time in order to compute the temporal bucket).

Using Analysis hierarchies

Regarding the user of the Cube, an Analysis Hierarchy will have the same behavior as a standard Hierarchy with the notable exception that:

When the cube is queried:

- Expressing the Location on an Analysis Hierarchy on its first level will lead to the replication of the values obtained through the aggregation of the cube facts and the execution of the required post-processors for each of the non-filtered members of the first level of the analysis hierarchy.

- Expressing the Location on an Analysis Hierarchy beyond its first level will always return an empty result for aggregated measures.

These limitations are the direct consequence of the fact that it is no longer possible to infer the relationship

between the records and the aggregates at the queried location if the expressed member on the analysis hierarchy

is not a top level member:

While this limitation seems to reduce the usability of Analysis Hierarchies, there are two ways to work around this limitation and leverage analysis hierarchies as a powerful analysis tool:

- Define post-processed measures explicitly handling the expressed Analysis Hierarchy member: by prefetching the location without any expressed analysis hierarchy and by defining the behavior regarding the expressed Analysis Hierarchy members, it is possible to specify values returned by the post-processor.

- Define a global behavior regarding facts : If, for any record of the database, there is one and only one member of the Analysis Hierarchy for which we want to match the facts to, then it is possible to redefine the logical relationship between facts and aggregates at any aggregation level of the cube. This can be done by implementing an "Aggregation Procedure" and associating it with the analysis hierarchy when defining the cube structure.

Analysis Hierarchies and Copper API

The Copper API allows for the functional declaration of join-based hierarchies or bucketing hierarchies. Both are analysis hierarchies, and come out of the box with their matching aggregation procedures.

Introspecting analysis hierarchies

Analysis hierarchies can have its top levels corresponding to selection fields (like in a standard hierarchy) and its deepest levels defined programmatically (like in an analysis hierarchy).

Such an analysis hierarchy is called "introspecting" analysis hierarchy.

Although the members of the hierarchies are partially matching records, this type of hierarchy is still an Analysis Hierarchy and the above-mentioned specificities still apply here.

Defining an analysis hierarchy in a cube

The addition of an analysis hierarchy inside the structure of a cube is done during its definition like standard hierarchies.

The ActivePivot library API provides cube definition fluent builders; adding an Analysis Hierarchy through that API is done via the withAnalysisHierarchy(String hierarchyName, String pluginKey) method.

Note : In order to properly add an analysis hierarchy, one must ensure that the

IPluginValuesIAnalysisHierarchyandIAnalysisHierarchyDescriptionProviderwith a matching pluginKey were added to ActivePivot'sRegistry

Example :

final IActivePivotInstanceDescription cubeDescription =

StartBuilding.cube("Cube")

.withSingleLevelDimension("id")

.withDimension("dimension")

.withAnalysisHierarchy("analysisHierarchy_example", StringAnalysisHierarchy.PLUGIN_KEY)

.build();

This example defines a cube named "Cube" along with:

- The "id" dimension containing a single standard hierarchy named "id" whose only level, also named "id", contains members matching the values of the "id" field in the cube's selection of the database.

- The "dimension" dimension containing an analysis hierarchy named "analysisHierarchy_example" for which:

- The member data and logic is defined by

IAnalysisHierarchyimplementation with theStringAnalysisHierarchy.PLUGIN_KEYplugin key. - The structure description is defined by

IAnalysisHierarchyDescriptionProviderimplementation with theStringAnalysisHierarchy.PLUGIN_KEYplugin key.

- The member data and logic is defined by

Customizing an analysis hierarchy during its definition

By default, the properties and the structure of an Analysis Hierarchy are defined by the matching IAnalysisHierarchyDescriptionProvider

implementation. It is however possible to greatly customize an analysis hierarchy description by using the withCustomizations(Consumer<IHierarchyDescription>) method

when adding it to the cube description.

Reusing the previous example, we now have:

final IActivePivotInstanceDescription cubeTwoDescription =

StartBuilding.cube("Cube2")

.withSingleLevelDimension("id")

.withDimension("dimension")

.withAnalysisHierarchy("stringAH", StringAnalysisHierarchy.PLUGIN_KEY)

.withCustomizations(

desc -> {

desc.setName("Customized Hierarchy"); // Renames the AH

desc.setAllMembersEnabled(false); // Makes the AH slicing

desc.putProperty("PropertyName", "PropertyValue");

// Adds the PropertyName:PropertyValue pair to the AH's properties

})

.build();

This example defines a cube named "Cube2" along with:

- The "id" dimension containing a single standard hierarchy named "id" whose only level, also named "id", contains members matching the values of the "id" field in the cube's selection of the database.

- The "dimension" dimension containing an analysis hierarchy effectively named "Customized Hierarchy" for which:

- The member data and logic is defined by

IAnalysisHierarchyimplementation with theStringAnalysisHierarchy.PLUGIN_KEYplugin key. - The structure description is defined by

IAnalysisHierarchyDescriptionProviderimplementation with theStringAnalysisHierarchy.PLUGIN_KEYplugin key, then altered by the customization method call.

- The member data and logic is defined by

Note : When altering the structure of the hierarchy, make sure that the resulting description is supported by the

IAnalysisHierarchyimplementation.

Implementing Analysis Hierarchies

Before considering implementing and using a custom Analysis Hierarchy, make sure that your use case effectively requires this solution:

- In some cases, data modification or replication in order to introduce an attribute in the database is perfectly acceptable.

- the Copper API already provides a functional API for defining Analysis Hierarchies for joins and bucketing use-cases, the generated hierarchies are backed by aggregation procedures, so there is no need for customizing measures and post-processors in order to use these hierarchies for data exploration.

When defining an analysis hierarchy, one must implement the following IPluginValues:

IAnalysisHierarchyresponsible for the definition of the hierarchy's members and the related logic.IAnalysisHierarchyDescriptionProviderresponsible for providing a default description of the Analysis Hierarchy.

Example - Scenario-based PnL extraction for parametric VaR

As presented before, in that use case, the user wishes to be able to view scenario-based metrics for one or several scenario.

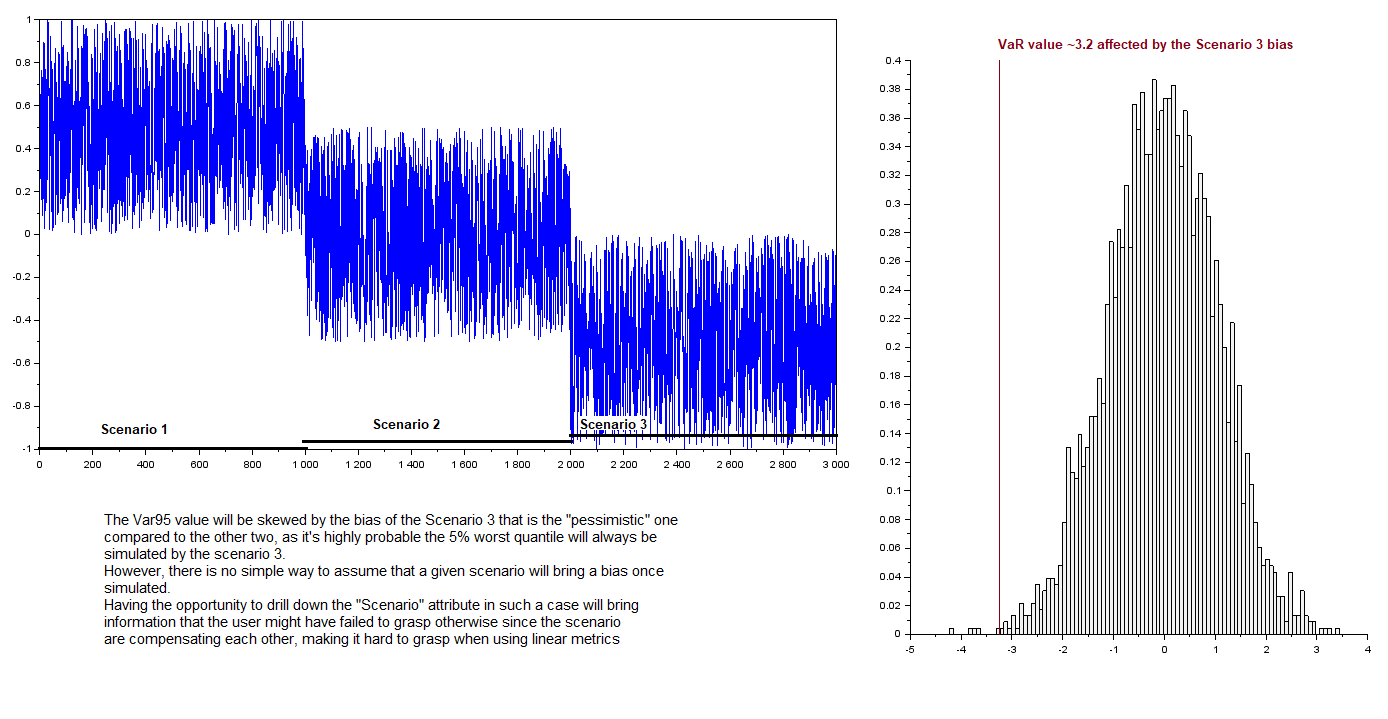

Here we consider the example of an extreme case where each scenario bring a constant bias in the output of the simulated data, with each scenario being "compensated" by an opposite scenario.

However, since the VaR is not a linear metric, the worst-case scenario may impact the value of the aggregated VaR as well as the metric value on a granular level.

This showcases the need for the ability to drill down between the output of the different scenario simulated by the risk engine.

We therefore wish to introduce a level corresponding to the "scenario" attribute, without modifying the database nor the vectorized data provided by the Risk Engine.

Analyzing scenario based metrics is only a subset of the actions performed by the risk analysts, who will likely still want to analyze VaR metrics at any business related aggregated level.

The "scenario" analysis hierarchy must therefore only affect analysis if the location is drilled-down at the scenario level by the user. Thus, the hierarchy will not be slicing and have an "ALL" top level, and its second level "Scenario" will contain the names of the scenario used in the VaR simulation.

The following listing is the implementation of the Analysis Hierarchy, with the appropriate members added when the hierarchy is instantiated. In the example, the member list is statically defined, but it is not a necessity.

Note that the listing extends

AAnalysisHierarchyV2rather than the base interfaceIMultiVersionAxisHierarchyas this abstract class is the recommended entry point for writing custom analysis hierarchies.

@QuartetExtendedPluginValue(intf = IMultiVersionHierarchy.class, key = AHForPnLExtract.PLUGIN_KEY)

public static class AHForPnLExtract extends AAnalysisHierarchyV2 {

protected Collection<String> SCENARIOS =

List.of("SCENARIO 1", "SCENARIO 2", "SCENARIO 3", "SCENARIO 1 EDITED");

public static final String PLUGIN_KEY = "scenarioHierarchy";

/**

* Constructor.

*

* @param info the info about the hierarchy

* @param levelInfos the info about the levels

* @param levelDictionaries the levels' dictionaries

*/

public AHForPnLExtract(

IAnalysisHierarchyInfo info,

List<ILevelInfo> levelInfos,

IWritableDictionary<Object>[] levelDictionaries) {

super(info, levelInfos, levelDictionaries);

}

@Override

public String getType() {

return PLUGIN_KEY;

}

@Override

protected Iterator<Object[]> buildDiscriminatorPathsIterator(IDatabaseVersion database) {

return SCENARIOS.stream().map(scenarioName -> new Object[] {null, scenarioName}).iterator();

}

}

Then, the default structure of the hierarchy is provided by the following

IAnalysisHierarchyDescriptionProvider implementation:

@QuartetPluginValue(intf = IAnalysisHierarchyDescriptionProvider.class)

public static class AHDescProviderForPnlExtract extends AAnalysisHierarchyDescriptionProvider {

public AHDescProviderForPnlExtract() {

super();

}

@Override

public String key() {

return AHForPnLExtract.PLUGIN_KEY;

}

@Override

public IAnalysisHierarchyDescription getDescription() {

final IAnalysisHierarchyDescription description =

new AnalysisHierarchyDescription(AHForPnLExtract.PLUGIN_KEY, "Scenario", true);

description.addLevel(new AxisLevelDescription("Scenario", null));

return description;

}

}

Let's consider a simple computation of the Averaged PnL defined by the following snippet :

Copper.sum("SimulatedPnLs")

.map(

(v, cell) ->

cell.writeDouble(v.readVector(0).sumDouble() / v.readVector(0).size()),

ILiteralType.DOUBLE)

.as("averaged simulated pnL")

Here is the output when querying the "Averaged simulated pnL" measure on the Grand Total of the cube and

as well as the Total drilled-down per scenario :

| Trade | Desks | Trader | Scenario | Averaged simulated pnL |

|---|---|---|---|---|

| AllMember | AllMember/AllMember | AllMember | 0.437 | |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 1 | null |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 1 EDITED | null |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 2 | null |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 3 | null |

Although the correct scenario members are showed in the output cellSet, the measure in not computed as the proper way to drill down the scenario had not yet been defined.

Add post-processed measures handling the Analysis Hierarchy drill-down

In order to properly use the analysis hierarchy as a tool for the data exploration, Scenario-based metrics must be defined and implemented:

The user wishes to simply obtain the aggregated PnL figures for a given expressed scenario, and the consolidated sum for all scenarios if none is specified.

Since the sum is a linear operator, the extraction can be done at any level of aggregation, we can therefore compute the extracted aggregates simply from the values of the complete PnL vector at the queried location:

- If the scenario is expressed in the query extract the subVector of PnL simulations matching the scenario

- Otherwise, keep the complete PnL simulation vector :

Here is the implementation of a post-processor that performs as presented

@QuartetExtendedPluginValue(intf = IPostProcessor.class, key = pnlExtractorPP.PLUGIN_KEY)

public static class pnlExtractorPP extends ADynamicAggregationPostProcessor {

public static final String PLUGIN_KEY = "plnExtractor";

/** Constructor. */

public pnlExtractorPP(String name, IPostProcessorCreationContext creationContext) {

super(name, creationContext);

}

@Override

protected void evaluateLeaf(

ILocation leafLocation, IRecordReader underlyingValues, IWritableCell resultCell) {

final int scenarioLevelCoordinate =

pivot.getAggregateProvider().getHierarchicalMapping().getCoordinate(4, 1);

final ILevelInfo levelInfo =

pivot

.getAggregateProvider()

.getHierarchicalMapping()

.getLevelInfo(scenarioLevelCoordinate);

if (LocationUtil.isAtOrBelowLevel(leafLocation, levelInfo)) {

// The scenario level is expressed

final String scenarioValue = (String) LocationUtil.getCoordinate(leafLocation, levelInfo);

switch (scenarioValue) {

case "SCENARIO 1":

resultCell.write(((IVector) underlyingValues.read(0)).subVector(0, 500));

break;

case "SCENARIO 2":

resultCell.write(((IVector) underlyingValues.read(0)).subVector(500, 500));

break;

case "SCENARIO 3":

resultCell.write(((IVector) underlyingValues.read(0)).subVector(1_000, 500));

break;

case "SCENARIO 1 EDITED":

resultCell.write(((IVector) underlyingValues.read(0)).subVector(1_500, 500));

break;

default:

throw new IllegalStateException("Unexpected scenario.");

}

} else {

resultCell.write(underlyingValues.read(0));

}

}

@Override

public String getType() {

return PLUGIN_KEY;

}

@Override

protected boolean supportsAnalysisLevels() {

return true;

}

}

Note : Once a post-processed measure handling the Analysis Hierarchy is created and defined in the cube, it can be reused via the Copper API when defining new measures. The hierarchy description must however contain the

IAnalysisHierarchy.LEVEL_TYPES_PROPERTYproperty with the appropriate value following the"levelName1:type1,levelName2:type2"convention.

We can now add measures to the cube with the following snippet :

Copper.sum("SimulatedPnLs").as("sum").publish(ctx);

Copper.newPostProcessor(pnlExtractorPP.PLUGIN_KEY)

.withProperty(AAdvancedPostProcessor.UNDERLYING_MEASURES, "sum")

.withProperty(AAdvancedPostProcessor.ANALYSIS_LEVELS_PROPERTY, "Scenario")

.withProperty(AAdvancedPostProcessor.OUTPUT_TYPE, ILiteralType.DOUBLE_ARRAY)

.withProperty(

ADynamicAggregationPostProcessor.LEAF_TYPE, ILiteralType.DOUBLE_ARRAY)

.as("PnLExtractedScenario")

.publish(ctx);

Copper.measure("PnLExtractedScenario")

.map(

(v, cell) ->

cell.writeDouble(v.readVector(0).sumDouble() / v.readVector(0).size()),

ILiteralType.DOUBLE)

.as("averaged simulated pnL with Scenario drillDown")

Similarly to the previous section, we can query the "averaged simulated pnL with Scenario drillDown" measure

on the Grand Total and the Total drilled down per scenario locations :

| Trade | Desks | Trader | Scenario | Averaged simulated pnL with Scenario drillDown |

|---|---|---|---|---|

| AllMember | AllMember/AllMember | AllMember | 0.437 | |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 1 | -19999.262 |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 1 EDITED | 19998.173 |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 2 | -9997.965 |

| AllMember | AllMember/AllMember | AllMember | SCENARIO 3 | 10000.803 |

In this example, the expansion on the Scenario level showcases a strong bias related to the scenarios that would be very hard to analyze otherwise.

Misc implementation topics

Migrating to 6.0.X

The 6.0.0 version of ActivePivot introduced a new interface IAnalysisHierarchyDescriptionProvider whose purpose

is explicit : Providing a description of the analysis hierarchy.

That responsibility is removed from the AAnalysisHierarchy class contract,

which used to be the default provided base for IAnalysisHierarchy implementations.

The following methods describing the hierarchy and its structure are now deprecated:

AAnalysisHierarchy.getLevelsCount()AAnalysisHierarchy.getLevelComparator(int ordinal)AAnalysisHierarchy.getLevelField(int ordinal)AAnalysisHierarchy.getLevelType(int ordinal)AAnalysisHierarchy.getLevelName(int ordinal)AAnalysisHierarchy.getLevelFormatter(int ordinal)The definition of these attributes of the analysis hierarchy is now expected to be provided in the

IAnalysisHierarchyDescriptionreturned by theIAnalysisHierarchyDescriptionProvider#getDescription()implementation with the same plugin key as the Analysis Hierarchy.

Migration Example

Let's consider a multi-level analysis hierarchy with a static set of members and a custom comparator on the second level:

@QuartetExtendedPluginValue(

intf = IMultiVersionHierarchy.class,

key = StringAnalysisHierarchyOld.PLUGIN_KEY)

public static class StringAnalysisHierarchyOld extends AAnalysisHierarchy {

private static final long serialVersionUID = 20191122_02L;

public static final String PLUGIN_KEY = "STATIC_STRING";

protected final List<Object> levelOneDiscriminators;

protected final List<Object> levelTwoDiscriminators;

/**

* Default constructor.

*

* @param hierarchyInfo information about this hierarchy

*/

public StringAnalysisHierarchyOld(

IAnalysisHierarchyInfo hierarchyInfo,

List<ILevelInfo> levelInfos,

IWritableDictionary<Object>[] dictionaries) {

super(hierarchyInfo, levelInfos, dictionaries);

this.levelOneDiscriminators = Arrays.asList("A", "B");

this.levelTwoDiscriminators = Arrays.asList("X", "Y", "Z");

}

@Override

public String getType() {

return PLUGIN_KEY;

}

@Override

public int getLevelsCount() {

return this.isAllMembersEnabled ? 3 : 2;

}

@Override

public IComparator<Object> getLevelComparator(int levelOrdinal) {

// The second level is expected to use the reverse natural order

if (levelOrdinal == 2) {

return new ReverseOrderComparator();

} else {

return new NaturalOrderComparator();

}

}

@Override

protected Iterator<Object[]> buildDiscriminatorPathsIterator(IDatabaseVersion database) {

final List<Object[]> resultList = new ArrayList<>();

for (Object lvlOneDiscriminator : levelOneDiscriminators) {

for (Object lvlTwoDiscriminator : levelTwoDiscriminators) {

final Object[] path = new Object[getLevelsCount()];

path[path.length - 2] = lvlOneDiscriminator;

path[path.length - 1] = lvlTwoDiscriminator;

resultList.add(path);

}

}

return resultList.iterator();

}

}

The implementation for this hierarchy post 6.0 will be :

// Could extend ObjectAnalysisHierarchy when AAnalysisHierarchy will be replaced by

// AAnalysisHierarchyV2

@QuartetExtendedPluginValue(

intf = IMultiVersionHierarchy.class,

key = StringAnalysisHierarchy.PLUGIN_KEY)

public static class StringAnalysisHierarchy extends AAnalysisHierarchyV2 {

public static final String PLUGIN_KEY = "STATIC_STRING";

protected final List<Object> levelOneDiscriminators;

protected final List<Object> levelTwoDiscriminators;

/**

* Default constructor.

*

* @param hierarchyInfo information about this hierarchy

*/

public StringAnalysisHierarchy(

IAnalysisHierarchyInfo hierarchyInfo,

List<ILevelInfo> levelInfos,

IWritableDictionary<Object>[] levelDictionaries) {

super(hierarchyInfo, levelInfos, levelDictionaries);

this.levelOneDiscriminators = Arrays.asList("A", "B");

this.levelTwoDiscriminators = Arrays.asList("X", "Y", "Z");

}

@Override

protected Iterator<Object[]> buildDiscriminatorPathsIterator(IDatabaseVersion database) {

final List<Object[]> resultList = new ArrayList<>();

for (Object lvlOneDiscriminator : levelOneDiscriminators) {

for (Object lvlTwoDiscriminator : levelTwoDiscriminators) {

final Object[] path = new Object[getLevelsCount()];

path[path.length - 2] = lvlOneDiscriminator;

path[path.length - 1] = lvlTwoDiscriminator;

resultList.add(path);

}

}

return resultList.iterator();

}

@Override

public String getType() {

return PLUGIN_KEY;

}

}

for the AMultiVersionAnalysisHierarchy implementation

@QuartetPluginValue(intf = IAnalysisHierarchyDescriptionProvider.class)

public static class StringAnalysisHierarchyDescriptionProvider

extends AAnalysisHierarchyDescriptionProvider {

@Override

public IAnalysisHierarchyDescription getDescription() {

final var desc = new AnalysisHierarchyDescription(key(), "stringHierName");

final var levelDesc = new AxisLevelDescription(STATIC_STRING, null);

final var levelDescTwo = new AxisLevelDescription(STATIC_STRING + "_1", null);

levelDescTwo.setComparator(new ComparatorDescription(ReverseOrderComparator.type));

desc.addLevel(levelDesc);

desc.addLevel(levelDescTwo);

return desc;

}

@Override

public String key() {

return TestAnalysisHierarchyCompatibility.StringAnalysisHierarchy.PLUGIN_KEY;

}

}

for the description provider implementation.