Application Performance Monitoring

ActivePivot APM (Application Performance Monitoring) is a solution for monitoring the health and performance of ActivePivot instances. It provides several features that ease support work and reduce the burden of maintaining and troubleshooting ActivePivot.

ActivePivot APM provides the following main features:

- Distributed log tracing: Makes it easy to identify all the logs related to an operation (e.g. query execution) across nodes.

- Query monitoring: Monitor in-progress, successful or failed MDX queries, their associated execution logs, and their historical performance.

- Cube size and usage monitoring: Display the trend of cube aggregates provider sizes over time.

- Datastore monitoring: Display the trend of datastore sizes over time; Monitor the datastore transaction times; View successful and failed CSV loading, and their associated execution logs.

- Netty monitoring: Monitor the size of data transferred between nodes, in terms of single query execution, all queries executed by a user, or all activities, etc.

- Monitoring activities by user: Monitor activities (e.g. query execution) on a user level.

- JVM monitoring: Overall JVM status, including CPU, heap and off-heap memory, threads, GC, etc.

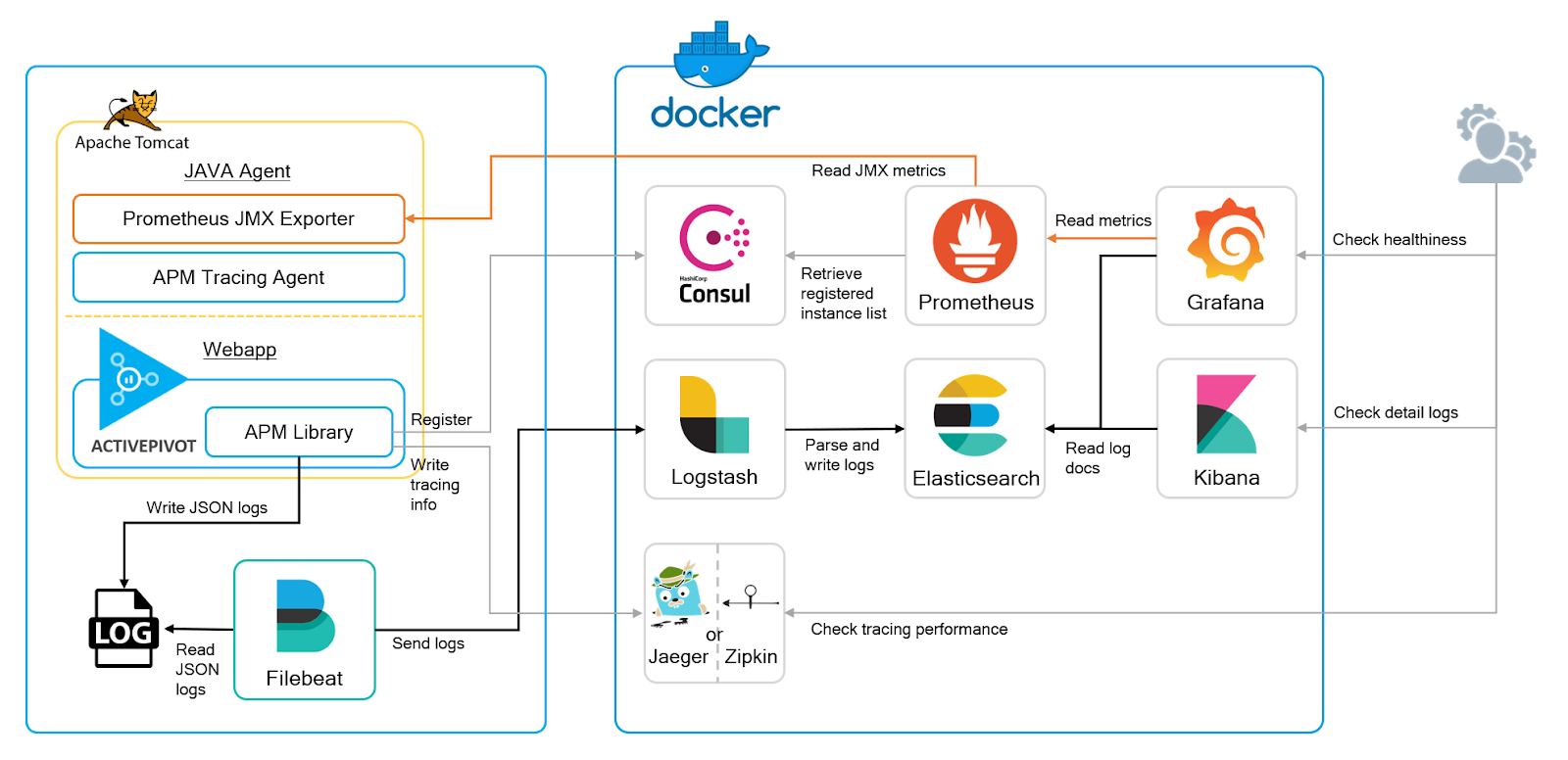

APM is built with a number of open-source tools. Below is a high-level illustration of an end-to-end monitored Activepivot relying on APM library, additional monitoring tools and their interaction.

Configuration

Adding APM in an ActivePivot project

The APM library relies on Extended Plugins or Types overriding to extend core components with monitoring capabilities. To load the monitoring classes, add the APM jar as a dependency of your project:

<dependency>

<groupId>com.activeviam.apm</groupId>

<artifactId>apm</artifactId>

<version>version of activepivot</version>

</dependency>

List of Extended Plugins

The extended plugins provided by the APM library are listed below:

| Class name | Plugin Key | Core class | Description |
|---|---|---|---|
| MonitoredActivePivotQueryExecutor | BASE | ActivePivotQueryExecutor | Replaces the default ActivePivotQueryExecutor, adding the ability to monitor query execution |
| MonitoredMDXStream | MDX | MdxStream | MdxStream with monitoring ability. Also appends the trace id to the error message displayed in ActiveUI |
| LogNettyMessenger | NETTY | NettyMessenger | Override that adds more logging when a query is sent and when the results are received |
| MonitoredSynchronousAggregatesContinuousQueryEngine | SYNC | SynchronousAggregatesContinuousQueryEngine | Override with extra logs on event handling and tracing capability covering all the subsequent activities |
| TraceDataInstanceDistributionManager | DATA_DISTRIBUTION_MANAGER | DataInstanceDistributionManager | Enables tracing when receiving a distributed transaction commit command |

Monitored Spring Configuration

The APM library relies on Spring BeanPostProcessor to automatically customize ActivePivot configuration classes and add monitoring capabilities to them.

The library also offers the configuration classes detailed in the following table:

| Spring Config | Description |
|---|---|
| MonitoredDataLoadingConfig | Adds the configuration of the IMessageHandler as well as a REST service to check the file loading status. See the "Data loading" chapter for details |
| MonitoringJmxConfig | Exposes some MBeans related to the previously mentioned monitored services |
| QueryPerformanceEvaluatorConfig | Enables query performance evaluation and slow query detection |
| TracingRestServiceConfig | Exposes a service allowing clients (e.g. ActiveUI) to forward client traces to the tracing server |
| TracingConfig | Enables and configures log tracing capabilities. It also includes the aforementioned BeanPostProcessors, which customize the ActivePivot core configuration classes to support monitoring |
| ExtraConfig | Adds configuration that brings additional logging (e.g. environment properties) |
| ConsulServiceConfig | (Optional) Service discovery configuration using a Consul server |

If some action must wait until the APM stack has started, use the following Spring annotation on the relevant bean:

@DependsOn(value = TracingConfig.APM_TRACING)

Logging

APM relies on SLF4J with a Logback binding for the logging part.

ActivePivot IHealthEventHandler monitoring

The ActivePivot core code provides some monitoring features through health events.

APM defines a specific QuartetType for the IHealthEventHandler class which overrides all the underlying health event handlers to make sure they are printed properly to SLF4J.

Additionally, ActivePivot core forces the logs generated by the ILoggingHealthEventHandler implementations to be under the same logger name (com.activeviam.health.monitor.ILoggingHealthEventHandler).

We do not think this is the best approach, as it may occasionally be necessary to split the logs into different files. This is why the library contains the following overrides:

| Event Handler Interface | Description |
|---|---|
| IActivePivotHealthEventHandler | Custom implementation MonitoredLoggingActivePivotHealthEventHandler which logs the messages into the logger com.activeviam.health.monitor.IActivePivotHealthEventHandler |
| IComposerHealthEventHandler | Custom implementation MonitoredLoggingComposerHealthEventHandler which logs the messages into the logger com.activeviam.health.monitor.IComposerHealthEventHandler |
| ICsvSourceHealthEventHandler | Custom implementation MonitoredLoggingCsvHealthEventHandler which logs the messages into the logger com.activeviam.health.monitor.impl.ICsvSourceHealthEventHandler |
| IDatastoreHealthEventHandler | Custom implementation MonitoredLoggingDatastoreHealthEventHandler which logs the messages into the logger com.activeviam.health.monitor.IDatastoreHealthEventHandler |
| IHealthEventHandler | New QuartetType MonitoredLoggingGlobalHealthEventHandler overriding the core LoggingGlobalHealthEventHandler class. It makes sure we are using the previously mentioned event handlers |

Configuration

Logback supports custom conversion specifiers, which make it possible to display specific information in the logs. APM defines three custom converters, detailed below:

| Converter | Description |
|---|---|
| com.activeviam.apm.logging.impl.LogUserConverter | Displays the current user for the thread. The user is taken either from the security layer or from the health event |
| com.activeviam.apm.logging.impl.LogThreadConverter | Displays the current thread. This is useful for health events. This converter can override the default Logback one |
| com.activeviam.apm.logging.impl.LogInstanceConverter | Required only if an ELK setup and JSON logs are used. This converter provides the node instance name specified in the property node.instance.name, so that the source node of a log can be identified in a centralized logging system like ELK |

A Logback configuration can then make use of these converters as follows:

<?xml version="1.0" encoding="UTF-8"?>

<!-- Example LOGBACK Configuration File http://logback.qos.ch/manual/configuration.html -->

<configuration>

<shutdownHook class="ch.qos.logback.core.hook.DelayingShutdownHook"/>

<jmxConfigurator/>

<!-- Exposing the conversion rules -->

<conversionRule conversionWord="thread"

converterClass="com.activeviam.apm.logging.impl.LogThreadConverter"/>

<conversionRule conversionWord="user"

converterClass="com.activeviam.apm.logging.impl.LogUserConverter"/>

<appender name="MAIN" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${custom.log.dir}/${project.artifactId}.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${custom.log.dir}/${project.artifactId}_%d{yyyy-MM-dd}.log.gz</fileNamePattern>

<maxHistory>30</maxHistory>

<cleanHistoryOnStart>true</cleanHistoryOnStart>

</rollingPolicy>

<encoder>

<!-- Using the converter in the appender pattern layout -->

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [Thread: %thread; User: %user] %-5level %logger{35} - %msg%n</pattern>

</encoder>

</appender>

[...]

<root level="INFO">

<appender-ref ref="MAIN"/>

</root>

<contextListener class="ch.qos.logback.classic.jul.LevelChangePropagator">

<resetJUL>true</resetJUL>

</contextListener>

</configuration>

If an ELK setup is used, the library is ready to produce JSON logs for feeding Logstash and Elasticsearch. All that is needed is an additional appender, as shown below:

<?xml version="1.0" encoding="UTF-8"?>

<!-- Example LOGBACK Configuration File http://logback.qos.ch/manual/configuration.html -->

<configuration>

<shutdownHook class="ch.qos.logback.core.hook.DelayingShutdownHook"/>

<jmxConfigurator/>

<conversionRule conversionWord="thread"

converterClass="com.activeviam.apm.logging.impl.LogThreadConverter"/>

<conversionRule conversionWord="user"

converterClass="com.activeviam.apm.logging.impl.LogUserConverter"/>

<conversionRule conversionWord="instance"

converterClass="com.activeviam.apm.logging.impl.LogInstanceConverter"/>

<appender name="JSON" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${custom.json.log.dir}/activepivot.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">

<fileNamePattern>${custom.json.log.dir}/activepivot_%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern>

<maxFileSize>1GB</maxFileSize>

<maxHistory>30</maxHistory>

<cleanHistoryOnStart>true</cleanHistoryOnStart>

</rollingPolicy>

<encoder class="net.logstash.logback.encoder.LogstashEncoder">

<!--

write the timestamp value as a numeric unix timestamp(number of milliseconds since unix epoch)

Check the link https://github.com/logstash/logstash-logback-encoder/tree/logstash-logback-encoder-5.2#customizing-timestamp

-->

<timestampPattern>[UNIX_TIMESTAMP_AS_NUMBER]</timestampPattern>

<!-- Exclude user name and thread name from MDC and standard fields to avoid duplication -->

<excludeMdcKeyName>user_name</excludeMdcKeyName>

<excludeMdcKeyName>thread_name</excludeMdcKeyName>

<fieldNames>

<timestamp>log_timestamp</timestamp>

<thread>[ignore]</thread>

</fieldNames>

<!-- Append customised fields -->

<provider class="net.logstash.logback.composite.loggingevent.LoggingEventPatternJsonProvider">

<pattern>

{

"user_name": "%user",

"thread_name": "%thread",

"instance_name": "%instance"

}

</pattern>

</provider>

</encoder>

</appender>

[...]

<root level="INFO">

<appender-ref ref="JSON"/>

</root>

<contextListener class="ch.qos.logback.classic.jul.LevelChangePropagator">

<resetJUL>true</resetJUL>

</contextListener>

</configuration>

Loggers Categorization

APM loggers can be categorized as follows:

| Category | Logger(s) |
|---|---|
| Feeding | com.activeviam.apm.source, com.activeviam.health.monitor.impl.ICsvSourceHealthEventHandler, com.activeviam.health.monitor.IDatastoreHealthEventHandler |
| Feed loading | com.activeviam.apm.loaded.feed. Used if you choose the feed.loading.message.handler.type option 'LOG'. In this case you will most likely prefer the output to go to a separate file |
| Queries | com.activeviam.apm.pivot, com.activeviam.apm.tracing, com.activeviam.apm.web, com.activeviam.health.monitor.IActivePivotHealthEventHandler |
| Distribution | com.activeviam.apm.messenger |
| Health Agent | com.activeviam.health.monitor.IComposerHealthEventHandler |
| CXF services | org.apache.cxf.services, check the doc |

CXF

Some extra logging is also configured to make sure we can output all the requests and responses made through CXF.

To ensure that all the services are properly covered, the configuration MonitoredActivePivotWebServicesConfig.class must be imported in the Spring Config class.

Data loading

The MonitoredDataLoadingConfig class provides the bean messageHandler() which creates the implementation of the IMessageHandler.

In your source Spring config class you could do the following:

...

@Autowired

private MonitoredDataLoadingConfig monitoredDataLoadingConfig;

@Bean

protected IMessageHandler<IFileInfo<Path>> messageHandler() {

return monitoredDataLoadingConfig.messageHandler();

}

@Bean(destroyMethod = "close")

public CSVSource dataCSVSource() {

final CSVSource csvSource = new TraceableCSVSource(DATA_TAG);

...

return csvSource;

}

protected List<IStoreMessageChannel<IFileInfo<Path>, ILineReader>> dataCSVChannels(

final CSVMessageChannelFactory<Path> dataCSVChannelFactory,

final IMessageHandler<IFileInfo<Path>> messageHandler) {

final List<IStoreMessageChannel<IFileInfo<Path>, ILineReader>> channels = new ArrayList<>();

ITuplePublisher<IFileInfo<Path>> tuplePublisher = ...;

IStoreMessageChannel<IFileInfo<Path>, ILineReader> channel = dataCSVChannelFactory.createChannel(topicName, storeName, tuplePublisher);

channel.withPublisher(tuplePublisher);

channel.withMessageHandler(messageHandler());

channels.add(channel);

return channels;

}

Depending on the properties, the bean makes it possible to:

- log the start and end of the processing of some input data, as well as the errors, through the logger com.activeviam.apm.source

- log the rejections of the input data through the logger com.activeviam.apm.source.rejection

- specify the property feed.loading.message.handler.type to select the type of the message handler:

  - if set to DEFAULT, simply logs detailed information about the file being loaded

  - if set to LOG, logs the feed loading in an external file (CSV style) upon completion, using the logger com.activeviam.apm.loaded.feed

  - if set to WEBHOOK, calls a webhook (external service) upon completion of the file loading
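The WEBHOOK handler is governed by two properties listed under "Properties exposed": a number of retries and a wait time between retries. How the library implements this is not shown here; the following is only a minimal, self-contained sketch of a retry loop driven by those two values. The class `WebhookRetrySketch`, the method `deliver` and the `BooleanSupplier` standing in for the HTTP call are all hypothetical names, not part of the APM API.

```java
import java.util.function.BooleanSupplier;

/** Hypothetical helper, not part of APM: retries a call until it succeeds or retries run out. */
final class WebhookRetrySketch {

    private WebhookRetrySketch() {
    }

    /**
     * @param call       one delivery attempt, returning true on success (stand-in for the real HTTP call)
     * @param numRetries retries allowed after the first attempt (cf. ...webhook.num.retries)
     * @param waitMillis pause between two attempts in milliseconds (cf. ...webhook.time.to.wait)
     * @return true if one attempt succeeded, false if all attempts failed or the thread was interrupted
     */
    static boolean deliver(final BooleanSupplier call, final int numRetries, final long waitMillis) {
        // Use a long so that numRetries == Integer.MAX_VALUE does not overflow.
        final long maxAttempts = (long) numRetries + 1;
        for (long attempt = 1; attempt <= maxAttempts; attempt++) {
            if (call.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(waitMillis);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and give up.
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }
}
```

With the default of Integer.MAX_VALUE retries, such a loop would in practice keep trying until the webhook answers, so a smaller value is probably preferable when the target service may be permanently unavailable.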

Importing the Spring Config class MonitoredDataLoadingConfig implicitly brings in the Spring Config classes MonitoredDataLoadingCacheConfig and MonitoredDataLoadingRestServicesConfig.

- MonitoredDataLoadingCacheConfig creates a cache that keeps all the loaded files as well as their status. It is used later on by the REST service to return the loading status of a file.

- MonitoredDataLoadingRestServicesConfig exposes a REST service to get the loading status of a file. The service is accessible alongside the ActivePivot core RESTful services, through the endpoint /cube/apm/dataloading.
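To make the idea of a size-bounded cache of loaded files more concrete, here is a small sketch using only the JDK. It is not the library's implementation: the class `LoadedFilesCacheSketch`, the `Status` enum and the eviction policy (oldest inserted entry first) are assumptions chosen for illustration. The constructor argument plays the role of the property activeviam.apm.feed.loading.cache.loaded.files.num.entries.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch, not part of APM: remembers the status of the most recently recorded files. */
final class LoadedFilesCacheSketch {

    /** Hypothetical status values; the real service may expose different ones. */
    enum Status { IN_PROGRESS, LOADED, FAILED }

    private final Map<String, Status> entries;

    LoadedFilesCacheSketch(final int maxEntries) {
        // Insertion-ordered map that drops its eldest entry once the bound is exceeded.
        this.entries = Collections.synchronizedMap(new LinkedHashMap<String, Status>() {
            @Override
            protected boolean removeEldestEntry(final Map.Entry<String, Status> eldest) {
                return size() > maxEntries;
            }
        });
    }

    /** Records or updates the status of a file. */
    void record(final String fileName, final Status status) {
        entries.put(fileName, status);
    }

    /** Returns the last known status, or null if the file is unknown or was evicted. */
    Status statusOf(final String fileName) {
        return entries.get(fileName);
    }
}
```

A REST endpoint such as the one described above could then answer a status request with a simple `statusOf(fileName)` lookup, returning "unknown" when the entry has already been evicted.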

Tracing

APM tracing implementation relies on the openzipkin/brave library. If a Zipkin server is available, it can also serve for performance tracing.

Trace configuration is done by TracingConfig. Trace enablement for requests via Spring WebSocket is already handled by MonitoredActivePivotWebSocketServicesConfig.

To include tracing information in the logs, the Logback configuration should include %X{traceId} and %X{spanId} in the pattern:

<encoder>

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{traceId}/%X{spanId}] [Thread: %thread; User: %user] %-5level %logger{35} - %msg%n</pattern>

</encoder>

You can also enable tracing for CSV Source loading by simply replacing the CSVSource instance with a TraceableCSVSource. If you have your own CSV Source implementation which extends CSVSource, you can change it to extend TraceableCSVSource instead.

However, you should make sure your custom CSV Source does not conflict with what TraceableCSVSource overrides:

| Traceable Class | Parent Class | Description |
|---|---|---|
| TraceableCSVSource | CSVSource | Method process is overridden to create and start a tracer. Method createParserContext is overridden to create an instance of TraceableParserContext instead of ParserContext |
| TraceableParserContext | ParserContext | Method processChannel is overridden to create and start a child tracer for the channel. Method createChannelTask is overridden to create an instance of TraceableChannelTask instead of ChannelTask |
| TraceableChannelTask | ChannelTask | Method reportEndOfChannel is overridden to end the tracer for the channel |

You should make sure the TracingConfig is initialized before your source config; check for the "Initialisation of APM Tracing" log. This is normally done for you if you are using MonitoredDataLoadingConfig in your source config.

In some cases, you may need to enforce it by importing it into a higher-priority Spring Config class:

@Autowired

@Qualifier(TracingConfig.APM_TRACING)

private Tracing tracing;

Spring Tracing filter

If you are using the WebApplicationInitializer, you will need to add the following to your class:

@Override

protected void doOnStartup(final ServletContext servletContext) {

final DelegatingTracingFilter tracingFilter = new DelegatingTracingFilter();

final FilterRegistration.Dynamic tracing = servletContext.addFilter(Conventions.getVariableName(tracingFilter), tracingFilter);

tracing.addMappingForUrlPatterns(EnumSet.of(DispatcherType.REQUEST), true, "/*");

}

If you are using Spring Boot, you will need to add the following to your SpringBootApplication class:

/**

* Adds Tracing Filter to Spring

*

* @return a FilterRegistrationBean for tracing

*/

@Bean

public FilterRegistrationBean<DelegatingTracingFilter> registrationDelegatingTracingFilter() {

final FilterRegistrationBean<DelegatingTracingFilter> registrationBean = new FilterRegistrationBean<>();

final DelegatingTracingFilter delegatingTracingFilter = new DelegatingTracingFilter();

registrationBean.setFilter(delegatingTracingFilter);

registrationBean.addUrlPatterns("/*");

return registrationBean;

}

Properties exposed

The exposed properties and their default values are listed below:

| Property | Description | Default value |
|---|---|---|
| activeviam.apm.config.hide.properties.pattern | Specifies the pattern used to hide sensitive properties when logged | *(password\|key).* |
| activeviam.apm.enable.all.apmanager.statistics | Enable the MBeans statistics for the ActivePivot manager | true |
| activeviam.apm.enable.xmla.servlet.logging | Enable the logging of the XMLA Servlet | true |
| activeviam.apm.enable.json.queries.service.logging | Enable the logging of the JSON Queries Service | true |
| activeviam.apm.enable.json.queries.service.detailed.logging | Enable the detailed logging of the JSON Queries Service | false |
| activeviam.apm.enable.json.queries.service.log.memory.stats | Enable the logging of the memory stats for the JSON Queries Service | false |
| activeviam.apm.enable.queries.service.logging | Enable the logging of the Queries Service | true |
| activeviam.apm.enable.queries.service.detailed.logging | Enable the detailed logging of the Queries Service | false |
| activeviam.apm.enable.queries.service.log.memory.stats | Enable the logging of the memory stats for the Queries Service | false |
| activeviam.apm.enable.streaming.service.logging | Enable the logging of the Streaming Service | true |
| activeviam.apm.enable.streaming.service.detailed.logging | Enable the detailed logging of the Streaming Service | false |
| activeviam.apm.enable.streaming.service.log.memory.stats | Enable the logging of the memory stats for the Streaming Service | false |
| activeviam.apm.enable.web.socket.logging | Enable the logging of the Web Socket | true |
| activeviam.apm.enable.web.socket.detailed.logging | Enable the detailed logging of the Web Socket | false |
| activeviam.apm.enable.web.socket.log.memory.stats | Enable the logging of the memory stats for the Web Socket | false |
| activeviam.apm.feed.loading.cache.loaded.files.num.entries | Number of entries kept in the feed loading cache | 1,000,000 |
| activeviam.apm.feed.loading.message.handler.type | Type of the feed loading message handler | DEFAULT |
| activeviam.apm.feed.loading.message.handler.webhook.url | Webhook URL for the feed loading message handler | |
| activeviam.apm.feed.loading.message.handler.webhook.num.retries | Number of retries for the webhook feed loading message handler | Integer.MAX_VALUE |
| activeviam.apm.feed.loading.message.handler.webhook.time.to.wait | Time to wait (ms) between retries for the webhook feed loading message handler | 3000 (3s) |
| activeviam.apm.jmx.thread.status.cache.timeout | Thread status cache value timeout in milliseconds. The cached thread status value is refreshed automatically when the timeout expires | 60000 (60s) |
| activeviam.apm.node.instance.name | Unique name of the current node. It must be provided if a logging/monitoring stack (e.g. ELK) is used | activepivot |
| activeviam.apm.zipkin.server.url | URL to the Open Zipkin server endpoint for performance tracing | |
| activeviam.apm.zipkin.span.level | Detail level of spans. This mainly affects the detail level in performance tracing. It can be either DEFAULT, NODE or TASK. More details can be found on confluence | DEFAULT |
| activeviam.apm.consul.host | Host or IP of the Consul service | |
| activeviam.apm.consul.port | Port of the Consul service | 8500 |
| activeviam.apm.activepivot.service.name | Service name of the instance. This is used for service grouping and filtering in Consul | activepivot |
| activeviam.apm.node.host | Host or IP of the node instance for registering to Consul | The first non-loopback host IP found in the network interfaces |
| activeviam.apm.node.healthcheck.port | Port which Consul should call for the health check. This should be the port used for the JMX exporter, e.g. -javaagent:jmx_prometheus_javaagent-0.3.1.jar=8081 | 8081 |
| activeviam.apm.node.healthcheck.path | Path which Consul should call for the health check | metrics |
| activeviam.apm.node.healthcheck.interval | Interval between health check attempts | 5m |
| activeviam.apm.node.healthcheck.timeout | Timeout for a health check attempt | 30s |
| activeviam.apm.node.healthcheck.deregister.time | Consul will de-register the instance from the registry if it loses contact for more than the specified time | 30m |
| activeviam.apm.query.performance.evaluation.min.sample.size | The minimum number of past performance samples required for evaluation | 10 |
| activeviam.apm.query.performance.evaluation.max.sample.size | The maximum number of past performance samples taken into account for evaluation | 30 |
| activeviam.apm.query.performance.evaluation.coefficient | The coefficient for adjusting the threshold | 1 |
| activeviam.apm.query.performance.evaluation.elasticsearch.urls | URL(s) of the Elasticsearch instance(s) providing the historical performance, e.g. http://localhost:9200 | null |
| activeviam.apm.query.performance.evaluation.elasticsearch.truststore.path | Path to the trust store used for encrypted communication with Elasticsearch | null |
| activeviam.apm.query.performance.evaluation.elasticsearch.truststore.type | Type of the trust store to be loaded for encrypted communication with Elasticsearch | null |
| activeviam.apm.query.performance.evaluation.elasticsearch.truststore.password | Password to open the trust store | null |
| activeviam.apm.query.performance.evaluation.elasticsearch.username | Username for logging in to Elasticsearch if authentication is required | null |
| activeviam.apm.query.performance.evaluation.elasticsearch.password | Password for logging in to Elasticsearch if authentication is required | null |
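As an illustration of what a pattern such as activeviam.apm.config.hide.properties.pattern could be used for, the sketch below masks the values of properties whose name matches a regular expression before they are logged. It is a guess at the general technique, not the library's code: the class `PropertyMaskingSketch`, the method `mask`, the `*****` replacement text and the choice to match on the property name rather than its value are all assumptions. The usage example further assumes the regular expression `.*(password|key).*`, since the default value shown in the table above is not a valid Java regular expression as printed.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical sketch, not part of APM: hides sensitive property values before logging. */
final class PropertyMaskingSketch {

    private PropertyMaskingSketch() {
    }

    /**
     * Returns a copy of the given properties in which every value whose property
     * name fully matches hidePattern is replaced by a fixed mask.
     */
    static Map<String, String> mask(final Map<String, String> properties, final Pattern hidePattern) {
        final Map<String, String> masked = new LinkedHashMap<>();
        for (final Map.Entry<String, String> entry : properties.entrySet()) {
            final boolean sensitive = hidePattern.matcher(entry.getKey()).matches();
            masked.put(entry.getKey(), sensitive ? "*****" : entry.getValue());
        }
        return masked;
    }
}
```

For example, calling `mask(props, Pattern.compile(".*(password|key).*"))` on a map containing `db.password` and `server.port` would leave the port untouched and replace the password value with the mask.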